Citizen-generated data (CGD) plays a crucial role in enhancing transparency and accountability in policymaking and service delivery, especially in resource-constrained environments. By allowing citizens to give feedback on the issues that concern them, CGD offers ground-level insights that traditional, centralised data collection often overlooks. This gives marginalised communities a way to voice their concerns and contributes to more inclusive and participatory models of governance.

Project Name

Identifying barriers to investment tool (iBIT)

Objective

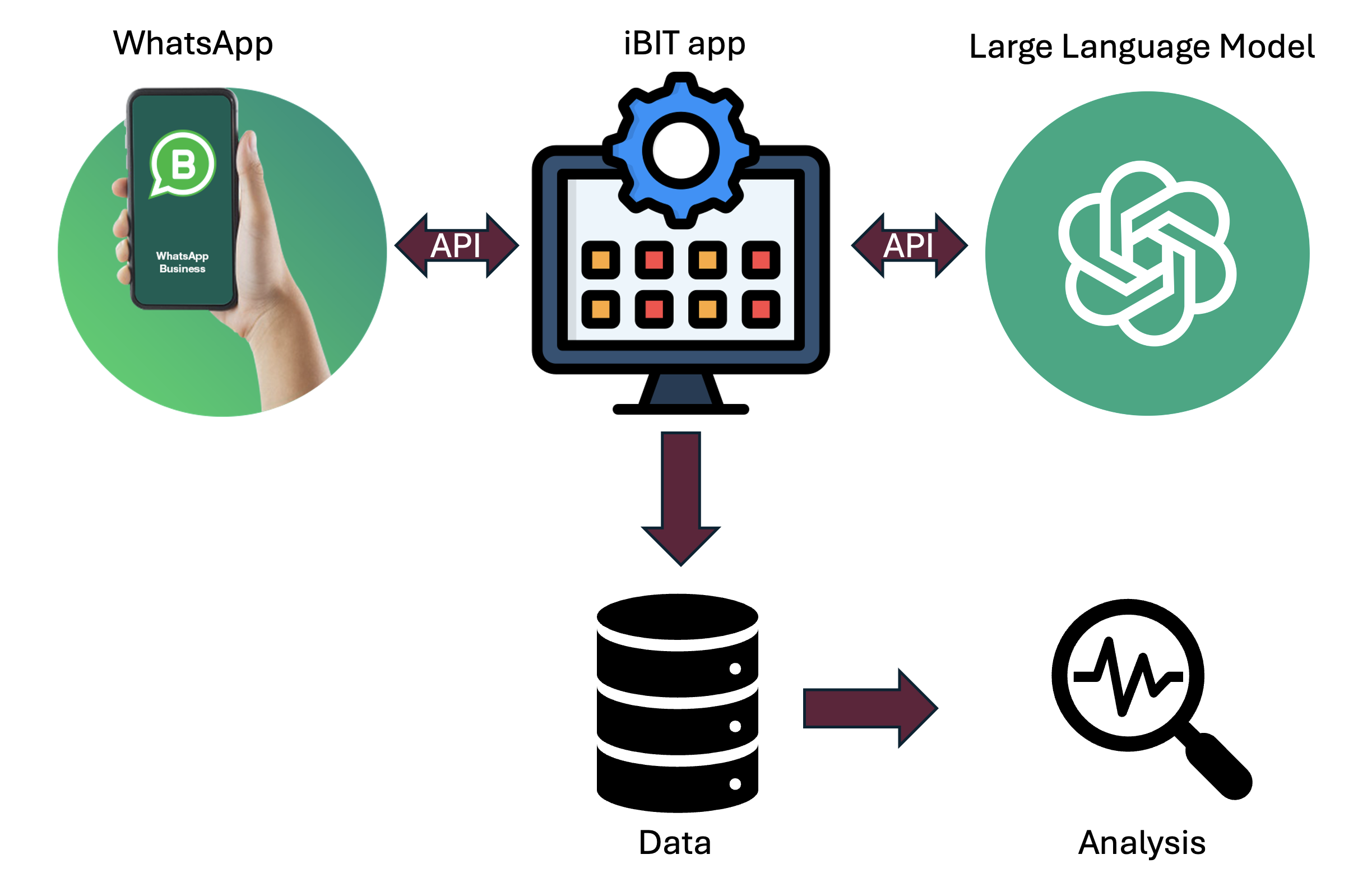

This project aims to leverage the widespread use of WhatsApp in South Africa to create a scalable and inclusive platform for citizens to provide feedback on the policies and regulations that prevent them from making or growing their investments. The collected data will be analysed using AI to generate insights and enable policymakers to address these issues with the aim of improving South Africa’s business environment.

The appeal of this concept lies in the integration of WhatsApp, a widely used communication platform, with a sophisticated Large Language Model (LLM). This combination allows rich citizen-generated data to be collected in a familiar and accessible format, while also ensuring that the data is interpreted and used effectively.

This concept creates a single platform for documenting investment barriers and, by extension, potential areas for government intervention. It complements existing datasets by providing an additional source against which they can be cross-checked. It also makes near-real-time analysis of business representatives’ concerns possible.

All citizen-generated data will be de-identified to minimise the privacy risks associated with the data collection.

Chatbot

To ensure maximum transparency and explainability, we explain here how our chatbot works and how we make sure that your data is managed ethically and responsibly. The first thing to understand is the conversation period, which lasts for 24 hours from the moment the user initiates a conversation. During this period, we store each message as it arrives and keep a record of personal information such as the user’s WhatsApp number and the time of the conversation. Once the conversation period ends, we process this data and move it to a long-term database, de-identifying it in the process. As a result, we do not retain your personal information beyond the conversation period.
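To illustrate the conversation period, the sketch below shows one way a short-term store could group incoming messages into 24-hour windows and hand ended conversations over for de-identification. It is a minimal sketch, not the project's implementation: the `Conversation` and `ShortTermStore` classes, the in-memory dictionary, and the choice to start a fresh period when a user writes again after the previous one has ended are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Assumption: the conversation period is a fixed 24-hour window that starts
# with the user's first message.
CONVERSATION_PERIOD = timedelta(hours=24)


@dataclass
class Conversation:
    """Hypothetical short-term record of one conversation."""
    whatsapp_number: str
    started_at: datetime
    messages: list[tuple[datetime, str]] = field(default_factory=list)

    def is_expired(self, now: datetime) -> bool:
        return now >= self.started_at + CONVERSATION_PERIOD


class ShortTermStore:
    """Hypothetical in-memory store of active conversations, keyed by number."""

    def __init__(self) -> None:
        self._active: dict[str, Conversation] = {}
        self._ended: list[Conversation] = []

    def add_message(self, number: str, text: str, received_at: datetime) -> Conversation:
        conv = self._active.get(number)
        if conv is not None and conv.is_expired(received_at):
            # The old period is over: queue it for processing, then start afresh.
            self._ended.append(self._active.pop(number))
            conv = None
        if conv is None:
            conv = Conversation(number, received_at)
            self._active[number] = conv
        conv.messages.append((received_at, text))
        return conv

    def pop_ended(self, now: datetime) -> list[Conversation]:
        """Remove and return every conversation whose period has ended, ready to
        be de-identified and moved to the long-term database."""
        for number, conv in list(self._active.items()):
            if conv.is_expired(now):
                self._ended.append(self._active.pop(number))
        ended, self._ended = self._ended, []
        return ended
```

A scheduled job would call `pop_ended` periodically; queuing an expired conversation inside `add_message` ensures it is still processed even if the user writes again before that job runs.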

How the conversation works

Users send a message on WhatsApp, which is forwarded to our application through the WhatsApp Business Application Programming Interface (API). When our application receives a message, the first thing we do is ask for the user’s informed consent to collect their data, as required by the Protection of Personal Information (POPI) Act. At any point during the conversation, users can send a quit response, at which point the conversation ends and all of the data they have shared with us is deleted.

If the user consents to us collecting their data, we send their message to a Large Language Model that we have instructed to hold a conversation with them and collect information about the policy or regulation that acts as a barrier to investment. Once the LLM is satisfied that it has collected this information, the user is sent some drop-down forms to complete, covering, for example, the sector they work in, the size of the business, and whether the barrier is national, provincial, or municipal in scope. This helps us understand the context of the business and identify more meaningful, disaggregated trends. To improve the user experience and accessibility, users can chat in any language and send voice notes.
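The conversation flow described above can be pictured as a small state machine. The sketch below is a hedged illustration under several assumptions: the quit keyword (`quit`), the consent reply (`yes`), the `Stage` names, the wording of the form questions, and the `llm` callable (which returns a reply plus a flag saying whether it has collected enough information) are all hypothetical. The drop-down forms are simplified to free-text answers, and the call to the real LLM is left as an injected function.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable

QUIT_WORD = "quit"      # hypothetical quit keyword
CONSENT_WORD = "yes"    # hypothetical consent reply


class Stage(Enum):
    AWAITING_CONSENT = auto()
    CHATTING = auto()   # the LLM collects details of the barrier
    FORMS = auto()      # structured questions about the business context
    DONE = auto()
    DELETED = auto()


# Hypothetical LLM interface: takes the transcript, returns (reply, finished).
LLMTurn = Callable[[list[str]], tuple[str, bool]]

FORM_QUESTIONS = [
    "Which sector is your business in?",
    "What is the size of your business?",
    "Is the barrier national, provincial, or municipal?",
]


@dataclass
class Session:
    llm: LLMTurn
    stage: Stage = Stage.AWAITING_CONSENT
    transcript: list[str] = field(default_factory=list)
    form_answers: list[str] = field(default_factory=list)

    def handle(self, text: str) -> str:
        """Process one incoming message and return the chatbot's reply."""
        if text.strip().lower() == QUIT_WORD:
            # Quitting ends the conversation and deletes everything shared so far.
            self.transcript.clear()
            self.form_answers.clear()
            self.stage = Stage.DELETED
            return "Conversation ended. Your data has been deleted."
        if self.stage is Stage.AWAITING_CONSENT:
            # Nothing is stored until the user has given consent.
            if text.strip().lower() == CONSENT_WORD:
                self.stage = Stage.CHATTING
                return "Thank you. Which policy or regulation is holding back your investment?"
            return "Do you consent to us collecting your data? Reply yes or quit."
        if self.stage is Stage.CHATTING:
            self.transcript.append(text)
            reply, finished = self.llm(self.transcript)
            self.transcript.append(reply)
            if finished:
                self.stage = Stage.FORMS
                return FORM_QUESTIONS[0]
            return reply
        if self.stage is Stage.FORMS:
            self.form_answers.append(text)
            if len(self.form_answers) < len(FORM_QUESTIONS):
                return FORM_QUESTIONS[len(self.form_answers)]
            self.stage = Stage.DONE
            return "Thank you for your feedback."
        return "This conversation has ended."
```

Checking for the quit keyword before anything else is what lets users leave, and have their data deleted, at any stage of the conversation.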

How the post-conversation processing works

Shortly after each conversation period ends, the data held in short-term storage is processed to remove personal information, stored in the long-term database, and then deleted from short-term storage. The de-identification process includes the following:

- The user’s WhatsApp number is deleted.

- The times of the conversation are aggregated to the week level. This makes it harder to identify an individual or their location at a particular time.

- We use other AI techniques to remove personal information in the form of unique identifiers, such as business names, that users may have shared with us.

- The provider of the LLM we use does not retain the data we send it long-term or use it to train its models.

- Our team regularly reviews the data by hand to check that it does not contain personal information.

Although these steps de-identify the data we collect, we cannot guarantee that it can never be re-identified, because we cannot control what information citizens choose to share. For this reason, we also comply with the POPI Act.
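The de-identification steps above could be approximated as follows. This is a minimal sketch, not the project's pipeline: the raw-record layout and the `deidentify` function are hypothetical, and in place of the AI techniques the project uses to find identifiers, it substitutes simple regular expressions for phone numbers and e-mail addresses plus a supplied list of known names. Timestamps are aggregated to the Monday of their week.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Stand-in for AI-based identifier detection (an assumption, not the project's
# actual method): crude patterns for phone numbers and e-mail addresses.
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class LongTermRecord:
    week_start: str       # ISO date of the Monday of the conversation's week
    messages: list[str]   # redacted message text; note there is no number field


def week_start(ts: datetime) -> str:
    """Aggregate a timestamp to the week level (the Monday of its week)."""
    monday = ts.date() - timedelta(days=ts.weekday())
    return monday.isoformat()


def redact(text: str, known_names: list[str]) -> str:
    """Replace phone numbers, e-mail addresses and known names with placeholders."""
    text = PHONE_RE.sub("[PHONE]", text)
    text = EMAIL_RE.sub("[EMAIL]", text)
    for name in known_names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return text


def deidentify(raw: dict, known_names: list[str]) -> LongTermRecord:
    """Build a long-term record from a short-term one. The WhatsApp number in
    raw["whatsapp_number"] is deliberately not copied across."""
    return LongTermRecord(
        week_start=week_start(raw["started_at"]),
        messages=[redact(m, known_names) for m in raw["messages"]],
    )
```

Pattern matching like this misses many identifiers, which is one reason the human review step in the list above remains necessary.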

How we analyse the data

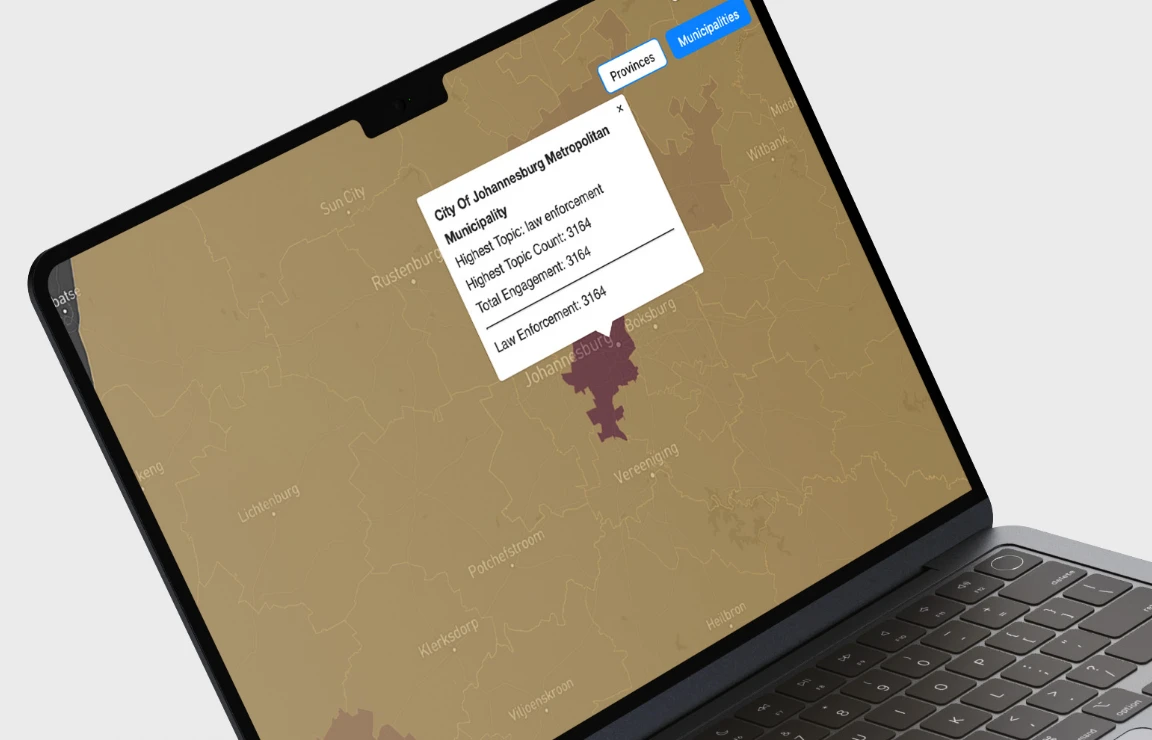

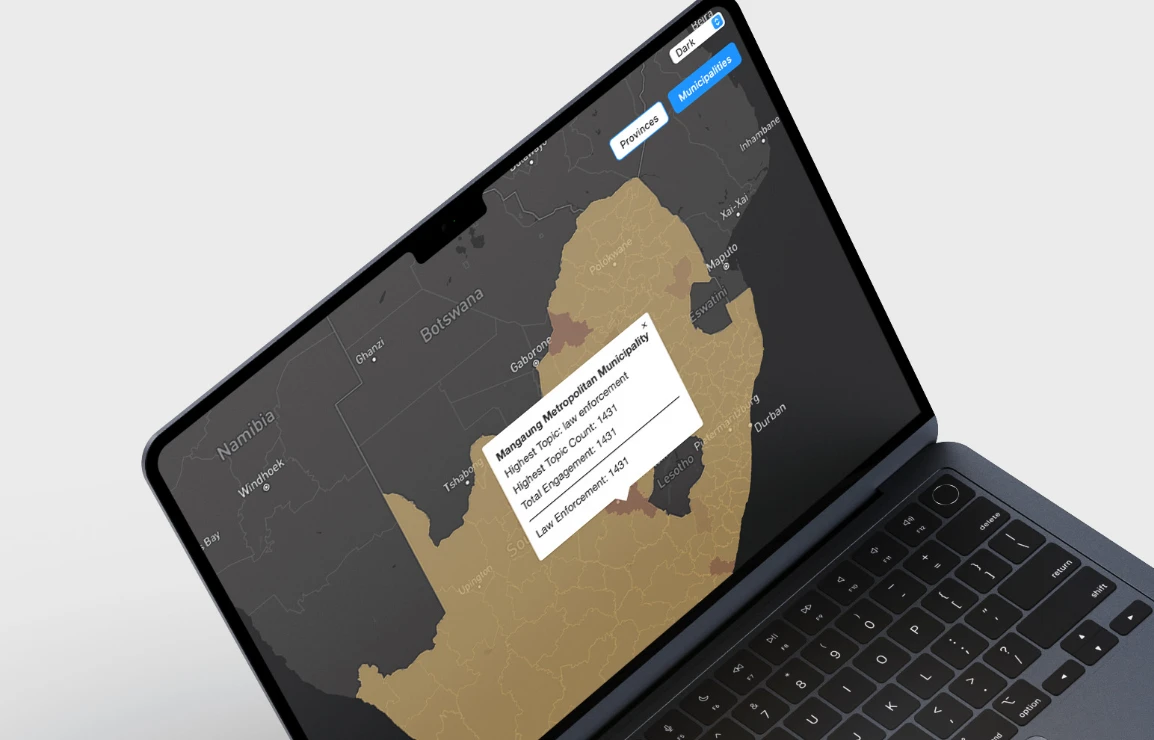

We then analyse and visualise the data, starting with topic analysis. This AI method matches the content of each message to a set of service delivery topics, which lets us classify the conversations. We display the classified data on an interactive map that allows users to identify the most pressing service delivery issues in each ward, local municipality, district municipality, or province over time. By comparing wards, policymakers can better determine which policies will best meet the needs of people in different areas. By tracking one region over time, they can see whether citizen complaints about a particular service delivery issue are increasing or decreasing. We also use LLMs to generate summaries of citizen conversations, which can likewise be filtered by region, time period, or service delivery issue.

Providing this near-real-time analysis will:

- Help policymakers set policy agendas that better meet the needs of citizens in different regions, and provide early indicators of widespread service delivery issues.

- Empower citizens with information they can use to hold their government representatives accountable across different spheres of government.

- Enable businesses to identify markets in need of goods and services.
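Once each conversation has a topic label, the map and trend views described earlier come down to counting conversations per topic, region, and week. The sketch below assumes the topic analysis has already produced `(region, week, topic)` tuples; the function names and record shape are hypothetical, and rolling counts up the ward, municipality, district, and province hierarchy is not shown.

```python
from collections import Counter, defaultdict
from typing import Optional

# Hypothetical classified record: (region, week_start, topic). In practice the
# topic label would come from the topic-analysis model.
Record = tuple[str, str, str]


def topic_counts(records: list[Record]) -> dict[tuple[str, str], Counter]:
    """Count conversations per topic for each (region, week) pair."""
    counts: dict[tuple[str, str], Counter] = defaultdict(Counter)
    for region, week, topic in records:
        counts[(region, week)][topic] += 1
    return counts


def most_pressing(counts: dict, region: str, week: str) -> Optional[str]:
    """Topic with the most conversations in a region for a given week."""
    c = counts.get((region, week))
    return c.most_common(1)[0][0] if c else None


def trend(counts: dict, region: str, topic: str, week_a: str, week_b: str) -> int:
    """Change in the number of conversations about `topic` in `region` between
    week_a and week_b; a positive value means complaints are increasing."""
    before = counts.get((region, week_a), Counter())[topic]
    after = counts.get((region, week_b), Counter())[topic]
    return after - before
```

Using `counts.get` rather than indexing keeps the lookups from adding empty entries to the `defaultdict`, and a `Counter` returns 0 for topics that were never mentioned.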